Notes on AI-Integrated Development: The 3 Modes of AI-Integrated Coding

Sharing patterns from personal projects that predict whether AI will help or hurt - after burning countless hours on abandoned code

If you've tried coding with AI tools, you've probably burned hours chasing half-baked suggestions, rewriting messy output, and ending up with code you don't trust. I went through the same spiral. After months of trial and error, I found repeatable patterns that significantly cut wasted time, kept me from shipping broken code, and made the tools genuinely useful.

The breakthrough was recognizing that AI coding happens in three distinct modes, and most people get stuck in exploration mode for everything, burning context and time without realizing it. Once I understood not just the modes but how to actively manage context and switch between them, my acceptance rate jumped significantly.

This is part one of a three-part series on AI-integrated development. In this post, I'll share the foundational framework that determines whether AI will accelerate your work or waste your time. Later parts will cover the safety systems needed for production and the hard-won patterns from months of daily use.

What Is AI-Integrated Development?

AI-integrated development is the practice of incorporating AI language models into your coding workflow as collaborative tools rather than replacement solutions. Unlike traditional development where you write every line, or AI-generated development where you prompt and accept, integration means treating AI as a probabilistic assistant within your deterministic development process.

The "integrated" part is crucial. Rather than using AI occasionally for boilerplate or delegating whole features to it, integration means understanding when AI assistance helps and when it hinders, building systematic safeguards against AI failures, and developing workflows that leverage AI's strengths while mitigating its weaknesses.

In concrete terms, AI-integrated development means:

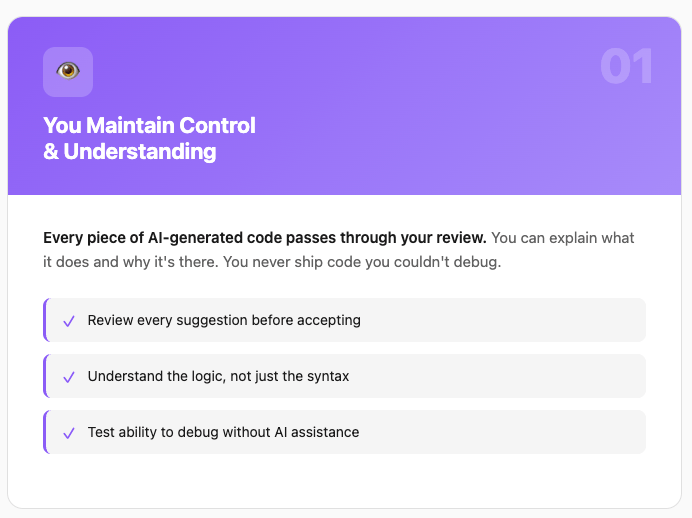

1. You maintain control & understanding

2. You match tools to tasks

3. You engineer context systematically

4. You implement safety layers

AI-integrated development acknowledges that AI tools are probabilistic systems operating in a deterministic environment. They generate statistically likely code, not absolutely correct code. They maintain conversational context, not true understanding. They provide suggestions, not solutions.

This differs from the marketing vision of "AI pair programming" where AI is portrayed as a knowledgeable colleague. In reality, it's more like working with an extremely fast but unreliable intern who has memorized Stack Overflow but doesn't understand your specific codebase, makes confident mistakes, and occasionally suggests storing credit cards in localStorage.

Many tools exist nowadays to facilitate AI-integrated development. Whether using Claude Code, Cursor, Copilot, or Windsurf, these tools share characteristics that change how code gets written:

1. Conversational context as primary state

2. Probabilistic output

3. Multiple interaction surfaces

4. Natural language ambiguity

Understanding these shared characteristics matters more than tool comparison. The patterns apply whether using Claude's latest model or Cursor.

Three Modes of AI-Assisted Development

After watching myself burn tokens on abandoned code, I identified three operational modes: exploration, execution, and refinement. Recognizing which mode you're in—and whether AI helps or hinders—determines effectiveness.

Mode 1: Exploration (20-30% useful)

What it is: Figuring out what to build while building it. Debating architectures, comparing approaches, discovering unknowns.

Real example: Four hours exploring dashboard architecture with AI. Generated three complete implementations (Server Components, React Query, hybrid). Shipped none. The constant pivots felt productive but produced zero usable code.

Recognition markers:

"Actually, what if..." pivots

Multiple approach changes

Most code abandoned

Context resets every few messages

Reality: Exploration mode is the default trap. Most developers get stuck here for everything, burning context and time. It rarely produces shippable code. It occasionally clarifies genuine unknowns but mostly wastes time reconsidering settled decisions. Developers think they're executing but without clear requirements, they're just exploring with extra steps.

Exploration is valuable for learning—terrible for shipping. If your goal is education, explore freely. If you're on deadline, exploration is procrastination with extra steps. Know your goal before starting the session.

Mode 2: Execution (60-70% useful)

What it is: Building what you already know. Clear requirements, established patterns, seeking speed.

Real example: "Create a React component for a metrics card. Props: title (string), value (number), change (number), changeType ('increase' | 'decrease'). Use Tailwind classes. Include proper TypeScript types. Add loading and error states."

Claude delivered functional code in one attempt:

    interface MetricsCardProps {
      title: string;
      value: number;
      change: number;
      changeType: 'increase' | 'decrease';
      loading?: boolean;
      error?: Error | null;
    }

    export function MetricsCard({ title, value, change, changeType, loading, error }: MetricsCardProps) {
      // Clean implementation following requirements
    }

No philosophical discussions, no alternatives, just implementation matching specifications.

Recognition markers:

Specific input-output requirements

Single-attempt success common

Minimal back-and-forth

"Build this" not "should we?"

Best for: Boilerplate, CRUD operations, test structures, applying consistent patterns. Saves 20-40% on initial writing.

Execution Mode Optimization: Custom Commands

Instead of writing ad-hoc prompts, get in the habit of building a library of custom commands for repeated patterns. Custom commands enforce execution mode discipline. They prevent drift into exploration because they require predefined patterns.

    // Component generation pattern
    "Create a [component type] with props: [prop list], using [style system], include [standard states]"

    // CRUD operation pattern
    "Generate [operation type] for [entity] with fields: [field list], following [project pattern]"

    // Test structure pattern
    "Write [test type] for [function/component] covering: [test scenarios], using [test framework]"

    // API endpoint pattern
    "Implement [HTTP method] endpoint for [resource] with validation: [rules], returning [response shape]"

Store these as text snippets, IDE templates, or even markdown files. The format doesn't matter; the discipline of using consistent patterns does. In execution mode, similar inputs should produce similar outputs. If the AI generates wildly different code for similar requests, you've accidentally shifted to exploration. Reset and clarify requirements.

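One way to keep such a library honest is to fill the templates programmatically, so a request with a missing field fails before it reaches the AI. The sketch below is only an illustration: the `commandTemplates` object, the `buildPrompt` helper, and the bracket-placeholder convention are assumptions for this example, not part of any tool's API.

```typescript
// Hypothetical command library; template text is taken from the patterns above.
const commandTemplates = {
  component:
    "Create a [component type] with props: [prop list], using [style system], include [standard states]",
  endpoint:
    "Implement [HTTP method] endpoint for [resource] with validation: [rules], returning [response shape]",
} as const;

type CommandName = keyof typeof commandTemplates;

// Replace every [placeholder] with its value. Throwing on a missing value
// keeps under-specified requests (hidden exploration) out of the session.
function buildPrompt(command: CommandName, values: Record<string, string>): string {
  return commandTemplates[command].replace(
    /\[([^\]]+)\]/g,
    (_match: string, key: string): string => {
      const value = values[key];
      if (value === undefined || value.trim() === "") {
        throw new Error(`Missing value for [${key}] in "${command}" command`);
      }
      return value;
    },
  );
}

// Usage: the metrics card request from the execution example.
const metricsCardPrompt = buildPrompt("component", {
  "component type": "React component for a metrics card",
  "prop list": "title (string), value (number), change (number)",
  "style system": "Tailwind classes",
  "standard states": "loading and error states",
});
console.log(metricsCardPrompt);
```

Because the template text is fixed, two requests for similar components differ only in the values you pass, which makes surprising output easier to spot.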
Mode 3: Refinement (50% useful)

What it is: Improving working code without reimagining it.

Real example: "This table re-renders on scroll. Add virtualization using react-window. Keep existing sorting and filtering."

AI made surgical changes—integrated library, added memoization, preserved functionality. No architectural debates.

Recognition markers:

Tests must still pass

Bounded scope (<50 lines)

Clear success criteria

Preserve existing behavior

Success factors: Specificity and existing tests. Without boundaries, refinement becomes exploration.

Progressive Enhancement Pattern

Refinement involves progressive enhancement through test-driven iterations:

Baseline: Get tests passing (first refinement)

Enhancement: Add performance optimizations (second refinement)

Polish: Improve readability/types (third refinement)

Each pass builds on the last, using test results to drive the conversation:

"Tests pass. Now optimize the sort function—current benchmark: 2.3ms"

[AI generates optimization]

"New benchmark: 0.8ms, tests still pass. Now add TypeScript generics"

Diff-Based Conversations: Don't paste entire files. Speak in deltas:

"Change lines 42-47 to use early return"

"Replace the forEach with reduce, keeping everything else"

Share actual git diffs:

git diff HEAD~ -- component.tsx
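If you want to check the bounded-scope marker before sharing a diff, counting changed lines is enough. The following is a minimal sketch, assuming the input is standard unified diff text as printed by `git diff`; the function names and the sample diff are made up for illustration.

```typescript
const REFINEMENT_DIFF_LIMIT = 50; // bounded-scope threshold from the recognition markers

// Count added and removed lines in unified diff text.
// Only lines inside hunks (after an "@@" header) are counted, so the
// "--- a/file" and "+++ b/file" header lines are never mistaken for changes.
function countChangedLines(diffText: string): number {
  let inHunk = false;
  let changed = 0;
  for (const line of diffText.split("\n")) {
    if (line.startsWith("diff --git")) {
      inHunk = false; // a new file section begins with header lines
    } else if (line.startsWith("@@")) {
      inHunk = true; // hunk header: content lines follow
    } else if (inHunk && (line.startsWith("+") || line.startsWith("-"))) {
      changed += 1;
    }
  }
  return changed;
}

function fitsRefinementScope(diffText: string): boolean {
  return countChangedLines(diffText) < REFINEMENT_DIFF_LIMIT;
}

// Made-up sample: replacing a forEach loop with reduce.
const sampleDiff = [
  "diff --git a/component.tsx b/component.tsx",
  "index 1a2b3c4..5d6e7f8 100644",
  "--- a/component.tsx",
  "+++ b/component.tsx",
  "@@ -42,5 +42,3 @@",
  " function total(items: number[]) {",
  "-  let sum = 0;",
  "-  items.forEach((n) => { sum += n; });",
  "-  return sum;",
  "+  return items.reduce((sum, n) => sum + n, 0);",
  " }",
].join("\n");

console.log(countChangedLines(sampleDiff), fitsRefinementScope(sampleDiff));
```

Counting additions and removals separately is a deliberate simplification; a modified line shows up as one removal plus one addition, so the count errs on the high side.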

The key insight: Most developers unconsciously drift between modes, exploring when they should execute, executing when they need to explore. Once you recognize your mode, you can either lean into it or reset. Exploration beyond 20 messages is probably a waste. Execution without clear requirements is just hidden exploration. Refinement without tests is gambling.

Methods & Definitions

These definitions provide concrete, measurable criteria for tracking AI coding sessions. Without clear definitions, terms like "success" or "context degradation" become meaningless.

Reset: new conversation or context reload

A reset occurs when you either start a completely new conversation (new chat window, new terminal session) or explicitly clear the context within an existing session (using commands like /clear or Reset context). This also includes situations where you paste the same requirements into a fresh session because the current one has degraded. Resets indicate context failure. If you're resetting frequently, you're either in the wrong mode or your requirements aren't clear.

Acceptance: merged with ≤10 LoC edits by a human

Acceptance means you used the AI-generated code with minimal human modification—specifically, 10 lines or fewer changed. This counts actual functional changes, not formatting or variable renaming. If you had to rewrite core logic, add error handling the AI missed, or fix fundamental misunderstandings, that's not acceptance.

Example: AI generates a 50-line React component. You fix two typos, adjust one condition, and rename a variable. Total: 4 lines touched, and only 3 count once the rename is excluded. This counts as accepted. Another session: same component, but you rewrite the state management logic (15 lines) because the AI misunderstood requirements. Not accepted.

Why 10 lines? It's the threshold where review time exceeds rewriting time. Below 10 lines, you're editing. Above 10 lines, you're essentially rewriting with the AI's code as reference.
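The acceptance definition is simple enough to track in a few lines. This is a sketch under assumptions: the `GenerationReview` shape and the sample log are invented for illustration, and you still have to count functional line changes yourself.

```typescript
const ACCEPTANCE_EDIT_LIMIT = 10; // "merged with <=10 LoC edits by a human"

interface GenerationReview {
  label: string;
  // Functional changes only; formatting and renames are excluded by definition.
  functionalLinesChanged: number;
}

function isAccepted(review: GenerationReview): boolean {
  return review.functionalLinesChanged <= ACCEPTANCE_EDIT_LIMIT;
}

// Share of reviewed generations that met the acceptance bar (0 for an empty log).
function acceptanceRate(reviews: GenerationReview[]): number {
  if (reviews.length === 0) return 0;
  return reviews.filter(isAccepted).length / reviews.length;
}

// The two sessions from the example above.
const reviewLog: GenerationReview[] = [
  { label: "component with minor fixes", functionalLinesChanged: 4 }, // upper bound from the example
  { label: "component with state logic rewritten", functionalLinesChanged: 15 },
];

console.log(reviewLog.map(isAccepted), acceptanceRate(reviewLog));
```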

Mode switch triggers

When do you switch between exploration, execution, and refinement modes? These are circuit breakers preventing wasted time.

Exploration → Execution when acceptance ≥60% across two generations

When exploring, you're looking for approach validation. If the AI generates something and you accept 60% or more of it (you need to change no more than 40% of the lines), and this happens twice in a row, you've found your pattern. Stop exploring. Switch to execution mode with those validated patterns.

Example: Exploring state management approaches. First generation suggests Zustand with specific patterns—you'd use 70% as-is. Second generation implements a feature using those patterns—again 65% usable. Stop exploring Zustand alternatives. You've found your approach.

Execution → Exploration if ≥2 regenerations fail or scope expands

In execution mode, you expect immediately usable output. If two attempts miss (the AI isn't understanding your requirements) or the scope suddenly expands ("wait, we also need to handle offline state"), you're no longer executing; you're exploring.

Example: "Add error handling to this endpoint." First generation misses the point. Second generation is still wrong. Stop. Either:

Your requirements are unclear (explore what you actually need)

Or the AI doesn't understand the context (explore how to explain it better).

Any → Refinement when a failing test exists and target diff ≤50 LoC

Refinement mode requires boundaries. A failing test provides a clear success criterion. The 50 LoC limit prevents scope creep. If you're changing more than 50 lines, you're not refining—you're rewriting.

Example: Test shows performance regression in a data transformation function (20 lines). This is perfect for refinement. Contrast: "Refactor this entire module" (200+ lines, no tests)—this isn't refinement, it's exploration of a new architecture.
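The three triggers can be written down as a small function, which makes the thresholds explicit and easy to adjust. This is a rough sketch, not a tool: the `SessionSignals` fields are hypothetical names, and the order in which the rules are checked (refinement first) is an assumption the triggers themselves don't specify.

```typescript
type CodingMode = "exploration" | "execution" | "refinement";

interface SessionSignals {
  mode: CodingMode;
  acceptedFractions: number[]; // share of lines kept per generation, newest last
  failedGenerations: number; // consecutive attempts that missed the requirements
  scopeExpanded: boolean;
  hasFailingTest: boolean;
  targetDiffLines: number; // expected size of the change
}

function nextMode(s: SessionSignals): CodingMode {
  // Any -> Refinement: a failing test exists and the target diff is <= 50 LoC.
  if (s.hasFailingTest && s.targetDiffLines <= 50) return "refinement";

  // Execution -> Exploration: two failed attempts, or the scope expanded.
  if (s.mode === "execution" && (s.failedGenerations >= 2 || s.scopeExpanded)) {
    return "exploration";
  }

  // Exploration -> Execution: acceptance >= 60% across the last two generations.
  if (s.mode === "exploration") {
    const lastTwo = s.acceptedFractions.slice(-2);
    if (lastTwo.length === 2 && lastTwo.every((fraction) => fraction >= 0.6)) {
      return "execution";
    }
  }

  return s.mode; // no trigger fired: stay in the current mode
}

// The state management example: 70% then 65% usable while exploring.
const stateManagementSession: SessionSignals = {
  mode: "exploration",
  acceptedFractions: [0.7, 0.65],
  failedGenerations: 0,
  scopeExpanded: false,
  hasFailingTest: false,
  targetDiffLines: 0,
};

console.log(nextMode(stateManagementSession));
```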

Why these specific thresholds?

They emerged from analyzing sessions where I felt productive versus frustrated:

60% acceptance: Below this, you're fighting the AI more than using it

2 regenerations: Third time is never the charm with AI—context has failed

50 LoC for refinement: Beyond this, cascading changes multiply error probability

20 messages for exploration: Attention and context meaningfully degrade after this

Track your own sessions against these thresholds. You might find different numbers for your workflow, but having concrete triggers prevents the "just one more prompt" trap that wastes hours.

Execution mode works best for well-defined, repetitive tasks.

Exploration mode rarely produces usable code but occasionally provides valuable insights.

Refinement mode requires existing tests as guardrails. Without clear requirements or tests, AI assistance often creates more work than it saves.

AI Task Decision Tree

This decision tree routes any coding task through a series of filters to find its optimal approach.

Start with the critical security check—if you're touching authentication, payment processing, or security-critical code, distinguish between core logic (human only) and supporting code. Core security logic like auth flows, token generation, and encryption should never be AI-generated. Supporting code like tests, documentation, and input validation can use AI with extreme caution and line-by-line review.

1. Security check

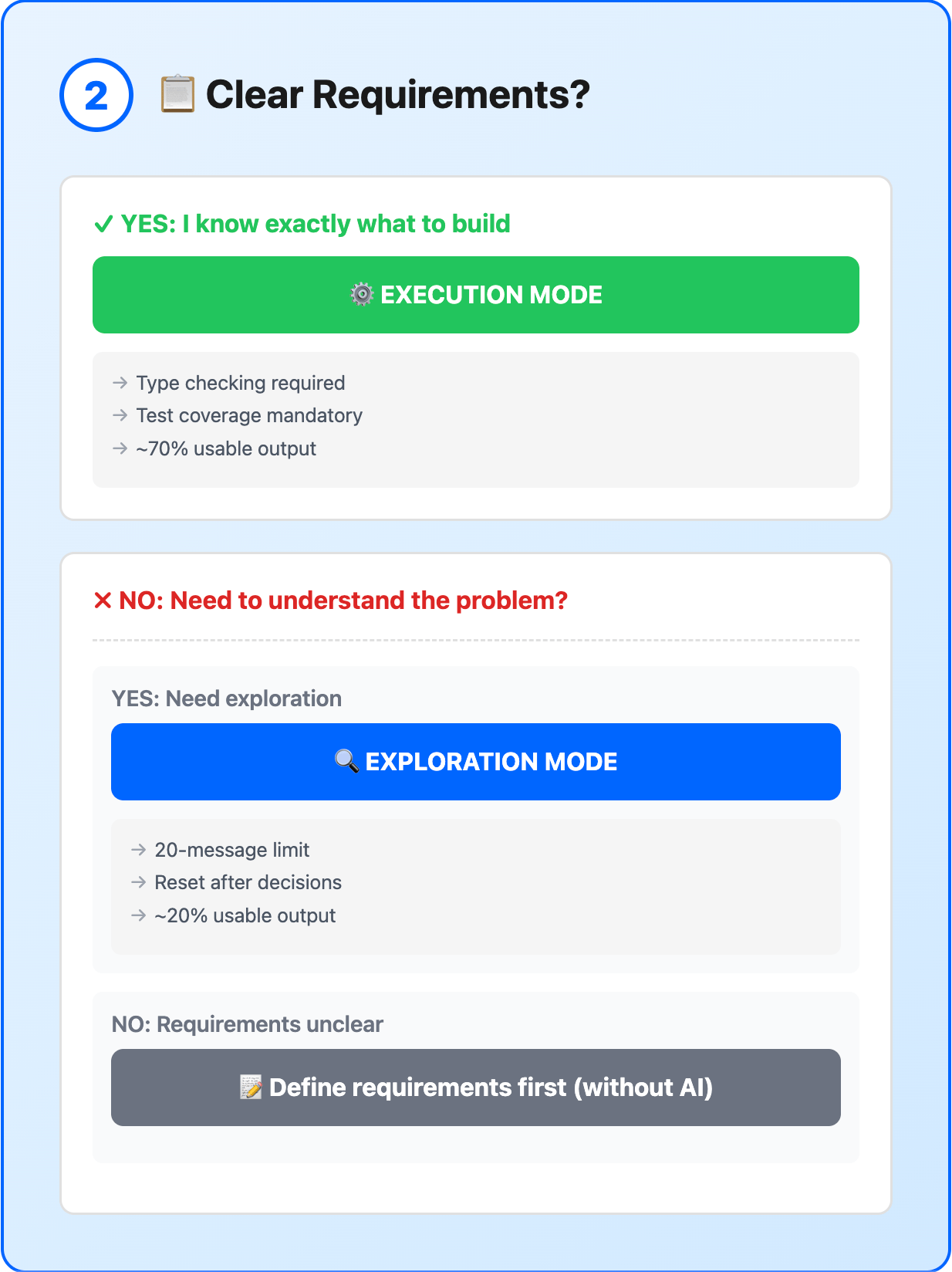

For everything else, assess whether you have clear requirements. Clear requirements route to Execution Mode where AI can efficiently implement defined patterns. Unclear requirements trigger a second check: do you need to understand the problem (Exploration Mode with a 20-message limit) or do you need to define requirements first without AI?

2. Clear requirements?

The final branch covers changes to existing code. Failing tests with bounded changes work well for Refinement Mode. Working code that needs changes requires assessment: refactoring with tests can use Refinement Mode, feature additions return to the requirements check, and performance optimization needs benchmarks first (since AI often makes performance worse).

3. Modifying existing code?

Each path includes specific guardrails—type checking for execution, message limits for exploration, diff size limits for refinement—that prevent common failure modes.
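The routing described in this section can be approximated in code. Treat this as a sketch under stated assumptions: the `TaskProfile` fields and route names are invented here, and because feature additions return to the requirements check, the existing-code branch is evaluated before that check, which may not match the exact layout of the original diagram.

```typescript
type TaskRoute =
  | "human-only"
  | "ai-with-line-by-line-review"
  | "execution"
  | "exploration-20-message-limit"
  | "define-requirements-without-ai"
  | "refinement"
  | "benchmark-first";

type ExistingCodeChange =
  | "none"
  | "failing-test-bounded"
  | "refactor-with-tests"
  | "feature-addition"
  | "performance";

interface TaskProfile {
  securityCritical: boolean; // auth, payments, or other security-critical code
  coreSecurityLogic: boolean; // auth flows, token generation, encryption
  existingCodeChange: ExistingCodeChange;
  clearRequirements: boolean;
  needToUnderstandProblem: boolean;
}

function routeTask(task: TaskProfile): TaskRoute {
  // 1. Security check comes first and overrides everything else.
  if (task.securityCritical) {
    return task.coreSecurityLogic ? "human-only" : "ai-with-line-by-line-review";
  }

  // 3. Existing-code branch (checked early; ordering is an assumption).
  switch (task.existingCodeChange) {
    case "failing-test-bounded":
    case "refactor-with-tests":
      return "refinement";
    case "performance":
      return "benchmark-first";
    case "feature-addition":
    case "none":
      break; // continue to the requirements check
  }

  // 2. Requirements check.
  if (task.clearRequirements) return "execution";
  return task.needToUnderstandProblem
    ? "exploration-20-message-limit"
    : "define-requirements-without-ai";
}

// Usage: a new, well-specified component outside any security-critical area.
const newComponentTask: TaskProfile = {
  securityCritical: false,
  coreSecurityLogic: false,
  existingCodeChange: "none",
  clearRequirements: true,
  needToUnderstandProblem: false,
};

console.log(routeTask(newComponentTask));
```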

Operational Techniques by Mode

Unplanned mode-switching is the real problem. You start intending to execute, hit one ambiguity, and unconsciously drift into exploration. Three hours later, you've explored six approaches and shipped nothing.

The fix: Make mode declaration explicit. Before starting, write down: "I am in [MODE] mode." If you can't decide, you're in exploration—set that 20-message limit immediately.

Each mode requires specific operational patterns:

Exploration Mode Operations

Set 15-message timer for proactive context reset

Save discoveries externally before clearing context

Open multiple parallel sessions for different approaches

Accept that you're learning, not shipping
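A message counter is one low-effort way to enforce these limits. The sketch below assumes one reading of the numbers in this post: 15 messages as the point to save notes and reset context, and 20 as the hard stop for exploration. The class and status names are hypothetical.

```typescript
type BudgetStatus = "ok" | "reset-soon" | "stop";

class ExplorationBudget {
  private messages = 0;
  private readonly softLimit: number;
  private readonly hardLimit: number;

  constructor(softLimit = 15, hardLimit = 20) {
    this.softLimit = softLimit;
    this.hardLimit = hardLimit;
  }

  // Call once per message sent to the AI; returns what to do next.
  recordMessage(): BudgetStatus {
    this.messages += 1;
    if (this.messages >= this.hardLimit) return "stop"; // exploration limit reached
    if (this.messages >= this.softLimit) return "reset-soon"; // save discoveries, then reset
    return "ok";
  }

  // Call after clearing context to start a fresh count.
  reset(): void {
    this.messages = 0;
  }
}

// Usage: record 15 messages and check the status of the last one.
const explorationBudget = new ExplorationBudget();
let latestStatus: BudgetStatus = "ok";
for (let i = 0; i < 15; i++) {
  latestStatus = explorationBudget.recordMessage();
}
console.log(latestStatus);
```

Whether a reset should restart the count toward the hard limit is a judgment call; this sketch restarts it, which treats each fresh context as a new exploration session.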

Execution Mode Operations

Start with pristine context

Use custom commands, not ad-hoc prompts

Expect predictable outputs for similar inputs

Batch similar tasks in one session

If output surprises you, you're not in execution mode

Refinement Mode Operations

Lead with the failing test output

Speak in diffs, not files

Use progressive enhancement cycles

Let test results drive the conversation

Commit after each successful refinement pass

Key Takeaways

Understanding these three modes and when to use each is the foundation of effective AI-integrated development. The core problem is that people get stuck in exploration mode for everything, burning context and time without realizing they're exploring, not executing. Make mode selection conscious, and use mode-specific techniques (active context management, custom commands, diff-based conversations) to maintain effectiveness.

The metrics and thresholds I've shared aren't prescriptive—they're starting points. Track your own sessions, find your own thresholds, but most importantly, be conscious of which mode you're in and whether it matches your task.

In the next part, I'll dive into the four layers of risk mitigation that make AI-generated code safe for production. We'll explore how to engineer context that prevents disasters, select the right interface for each task, calibrate trust based on risk, and handle the implications of scaling these patterns to a team.

For now, remember: AI coding tools aren't magic. They're pattern matchers with no understanding of your specific context. Use them in the right mode, with clear boundaries, and they can accelerate your work. Use them blindly, and they'll multiply your problems.
