Notes on AI-Integrated Development: The 4-Layer Defense System That Stops AI From Shipping Broken Code

Context engineering, interface selection, safety gates, and scale-ready patterns

In Part 1, I showed you the three modes of AI coding and how to recognize which one you're in. But knowing when to use AI is only half the battle. The other half is making sure AI doesn't ship disasters to production.

After my AI assistant tried to store passwords in localStorage, suggested JSON.parse without try-catch in payment processing, and confidently hallucinated Next.js APIs that didn't exist, I realized: AI tools will happily generate code that looks professional but violates every security principle you hold dear. They'll create race conditions with confidence, leak memory with style, and pass your syntax checks while failing your users.

This is Part 2 of my series on AI-integrated development. Here, I'll share a four-layer defense system. Each layer addresses a specific category of risk that emerges when integrating probabilistic tools into deterministic workflows. Together, they've taken my AI-generated code from "never trust" to "trust but verify" to, occasionally, "trust for this specific use case."

Four Layers of Risk Mitigation

Layer 1: Context Engineering

AI assistants have no inherent understanding of your project's constraints, decisions, or anti-patterns. Without explicit context engineering, they'll confidently violate architectural principles, suggest deprecated patterns, and solve problems in ways you've already decided against. Context engineering means building explicit, evolving documentation that feeds the AI your specific requirements and the lessons from your past failures.

How it manifests

Configuration files that grow from project-agnostic suggestions to highly specific rules. Documentation that explains not just what your architecture is, but why certain alternatives were rejected. Rules that encode lessons from every AI-generated failure.

In practice with .cursorrules:

Week 1 (naive optimism):

You are an expert TypeScript/Next.js developer

Write clean, modern code

Follow best practices

Use functional components with hooks

Month 1 (post-disasters):

# Core Rules

- NEVER install new npm packages without explicit approval

- ALWAYS use existing project patterns - check similar files first

- DO NOT refactor unrelated code when fixing bugs

- Keep changes minimal and focused on the task

# React/Next.js Specific

- Use the existing QueryClient setup, don't create new instances

- Server components by default unless useState/useEffect needed

- Keep 'use client' directives at the component file level, not in parents

- Use the project's existing error boundary pattern

# File Structure

- Components go in /components with [ComponentName]/index.tsx structure

- API routes follow existing /api/[resource]/route.ts pattern

- Don't create new folders without asking

Month 3 (scarred veteran):

# FORBIDDEN - These caused production incidents

- NEVER touch anything in /app/api/auth/* - manual changes only

- NEVER store sensitive data in localStorage, sessionStorage, or cookies

- NEVER use JSON.parse without try-catch

- NEVER remove existing error boundaries

- NEVER change database schema or migrations

- NEVER modify .env variables or configuration files

# State Management - Stop suggesting alternatives

- Server state: ONLY use @tanstack/react-query v5 with existing setup

- Client state: ONLY use Zustand stores in /lib/stores

- Form state: ONLY use react-hook-form with zod validation

- DO NOT suggest Redux, Context API, Valtio, Jotai, or MobX

- DO NOT create new providers or contexts

# Component Rules

- Extend existing components from /components/ui instead of creating new ones

- Use cn() utility for className merging, not template literals

- All components must have explicit TypeScript interfaces

- Loading states use <Skeleton /> component, not custom spinners

- Error states use <Alert /> component with destructive variant

# Data Fetching - Follow existing patterns exactly

- Pages: Use generateStaticParams + fetch in page components

- Client: Use custom hooks from /hooks that wrap react-query

- Mutations: Always include optimistic updates and rollback

- Never use useEffect for data fetching

- Always handle loading, error, and empty states explicitly

# Performance - Previous optimization attempts that backfired

- Don't add memo/useMemo/useCallback without profiler proof

- Don't lazy load components under 10KB

- Don't virtualize lists under 100 items

- Keep bundle chunks as configured in next.config.js

# Testing

- Test files use .test.tsx extension and sit next to component

- Use existing test utilities from /test/utils

- Don't mock what you don't own

- Integration tests over unit tests for API routes

# When you see these patterns, leave them alone

- // @ts-ignore comments are intentional workarounds

- Multiple setState calls in event handlers are batched

- Prop drilling in certain areas is intentional to avoid context overhead

- Some components use classes for Tailwind purge compatibility

# Common hallucinations to avoid

- Next.js doesn't have useServerState or getServerSession hooks

- There's no built-in way to share state between server and client components

- App Router doesn't support _app.tsx or _document.tsx

- generateMetadata can't access hooks or context

The progression from "write clean code" to "never touch auth" shows the reality of AI integration—every specific rule emerged from a specific failure. The Month 3 version includes sections on common hallucinations because I noticed patterns in what AI consistently gets wrong about Next.js App Router.
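One of the Month 3 rules, "NEVER use JSON.parse without try-catch", is easy to state and easy for an AI to ignore. Below is a minimal sketch of what that rule asks for, assuming a hypothetical `safeJsonParse` helper that project code calls instead of `JSON.parse`; the helper name and its result shape are illustrative, not from any library.

```typescript
// Hypothetical helper: the name and result shape are illustrative assumptions.
type ParseResult<T> =
  | { ok: true; value: T }
  | { ok: false; error: string };

function safeJsonParse<T = unknown>(raw: string): ParseResult<T> {
  try {
    // JSON.parse throws a SyntaxError on malformed input; turn that into a value.
    return { ok: true, value: JSON.parse(raw) as T };
  } catch (err) {
    const message = err instanceof Error ? err.message : String(err);
    return { ok: false, error: message };
  }
}

// Usage: the caller cannot reach `value` without handling the failure branch.
const parsed = safeJsonParse<{ amount: number }>('{"amount": 42}');
if (parsed.ok) {
  console.log(parsed.value.amount);
} else {
  console.error("Rejecting malformed payload:", parsed.error);
}
```

Returning a discriminated union rather than throwing makes the error path impossible to forget, which is the point of the rule in payment code. Note that the `as T` cast does not check the shape of the data; pairing this with a schema check (the rules already mandate zod for forms) is the safer version.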

The Context Progression Reality

Generic (Week 1): Copy-pasted from tutorials, mostly useless

Curated (Month 1): Filtered through actual failures, somewhat effective

Personalized (Month 3): Battle-tested rules specific to my patterns and mistakes

Each stage required failures to reach the next. No shortcuts exist.

Layer 2: Interface Selection

Every interface to AI provides different context access and action capabilities. Success depends on matching your task's context needs with what the interface can actually see and do. It’s important to understand the fundamental trade-offs each interface type makes.

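Core questions to evaluate any interface:

1. Context Access: What can the interface actually see?

2. Actions: What can it do beyond generating text (edit files, run commands, commit)?

3. Context Accumulation: How does its context build up, and how does it degrade over a long session?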

In practice, this means:

Assess your task's context requirements first. A simple function needs local context. A refactor needs project-wide understanding. Architecture decisions need conceptual space without code noise.

Match interface to context needs. If your task requires understanding relationships across files, you need an interface with project access. If you need clean conceptual thinking, you need an interface you can reset. If you need to verify changes immediately, you need an interface that can execute code.

Recognize context degradation patterns. Every interface accumulates context differently. Some pollute quickly with conversation history. Others lose track of which files changed. Some can't distinguish between your requirements and their previous suggestions. Understanding your specific tool's degradation pattern prevents wasted time.
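The three steps above amount to a small decision rule. Here is a sketch of that rule, assuming three illustrative interface categories (chat, IDE assistant, terminal agent) with made-up capability flags. Real tools blur these lines and change often, so fill in the flags for the tools you actually use.

```typescript
// Illustrative capability model. The categories and flag values are
// assumptions for this sketch, not an evaluation of any specific product.
interface InterfaceProfile {
  name: string;
  projectAccess: boolean; // can read files beyond what you paste
  canExecute: boolean;    // can run tests or commands to verify changes
  cleanSlate: boolean;    // starts without project code in context, resets cheaply
}

interface TaskNeeds {
  crossFile: boolean;  // depends on relationships across files
  verifyNow: boolean;  // changes should be verified immediately
  conceptual: boolean; // needs thinking space without code noise
}

const profiles: InterfaceProfile[] = [
  { name: "chat", projectAccess: false, canExecute: false, cleanSlate: true },
  { name: "ide-assistant", projectAccess: true, canExecute: false, cleanSlate: false },
  { name: "terminal-agent", projectAccess: true, canExecute: true, cleanSlate: false },
];

// Keep only the interfaces whose capabilities cover every need the task has.
function matchingInterfaces(needs: TaskNeeds, options: InterfaceProfile[]): string[] {
  return options
    .filter((p) => !needs.crossFile || p.projectAccess)
    .filter((p) => !needs.verifyNow || p.canExecute)
    .filter((p) => !needs.conceptual || p.cleanSlate)
    .map((p) => p.name);
}

// A multi-file refactor that should be verified immediately.
console.log(matchingInterfaces({ crossFile: true, verifyNow: true, conceptual: false }, profiles));
```

The value of writing it down isn't automation. It forces the "what does this task need?" question before you start typing a prompt.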

The key insight: Interface capabilities determine outcomes more than prompt quality. The best prompt in the wrong interface produces worse results than an average prompt in the right interface. Know what your tools can see and do before choosing where to type your request.

Here's an example comparing Claude.ai and Claude Code to illustrate interface selection:

The Task: Add comprehensive error handling to 5 API endpoints across 3 files, including logging, status codes, and user-friendly messages.

Option 1: Using Claude.ai (chat interface)

You: "Here's my API endpoint code [paste 200 lines]. Add error handling."

Claude: [Provides complete rewritten code]

You: "Great, here's the next endpoint [paste 150 lines]..."

What Claude.ai can access:

Only the code you explicitly paste

Previous conversation context (getting polluted with each paste)

No understanding of your error handling in other files

Result: Five different error handling patterns, inconsistent with the rest of your codebase, no knowledge of your existing error utilities.

Option 2: Using Claude Code (terminal interface)

You: "Add consistent error handling to all endpoints in /api/routes using our existing AppError class"

Claude Code: [Reads all files, finds AppError pattern, applies consistently]

What Claude Code can access:

Your entire codebase via file system

Your existing AppError class in /utils/errors.js

All 5 endpoints across 3 files simultaneously

Your .eslintrc showing error handling conventions

Git history showing how errors were handled before

Actions Claude Code can take:

Modify all 5 endpoints in one operation

Run your tests to verify changes

Create a git commit with appropriate message

Show you a diff before applying

Result: Consistent error handling matching your existing patterns, using your AppError class, respecting your logging setup.

The Critical Difference: With Claude.ai, you worked harder (copy-pasting everything), got worse results (inconsistent patterns), and took longer (sequential conversation).

With Claude Code, the tool's ability to read your codebase meant it could find and match your existing patterns without you explaining them. Its ability to modify multiple files meant consistent changes. Its git integration meant easy rollback if needed.

Key Learning: The core request, "add error handling," was the same in both cases. The outcomes were completely different. Claude Code succeeded not because it's "better" but because this task required project-wide context and multi-file modifications, capabilities the terminal interface provides but chat doesn't.

This is why understanding interface capabilities matters more than perfecting prompts.
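For reference, here is a minimal sketch of what "consistent error handling using our existing AppError class" from Option 2 might look like in a TypeScript codebase. The `AppError` fields, `toErrorPayload`, and `withErrorHandling` are hypothetical stand-ins for whatever your /utils/errors.js actually contains. The web-standard `Response` is what Next.js App Router route handlers return.

```typescript
// Hypothetical AppError; the real class in your codebase may differ.
class AppError extends Error {
  readonly statusCode: number;
  readonly userMessage: string;

  constructor(message: string, statusCode: number, userMessage: string) {
    super(message);
    this.name = "AppError";
    this.statusCode = statusCode;
    this.userMessage = userMessage;
    // Keeps `instanceof AppError` working even when compiled to ES5.
    Object.setPrototypeOf(this, AppError.prototype);
  }
}

interface ErrorPayload {
  status: number;
  body: { error: string };
}

// One mapping for every endpoint: known AppErrors keep their status and
// user-facing message; anything else becomes a generic 500.
function toErrorPayload(err: unknown): ErrorPayload {
  if (err instanceof AppError) {
    return { status: err.statusCode, body: { error: err.userMessage } };
  }
  return { status: 500, body: { error: "Something went wrong. Please try again." } };
}

// Wraps a route handler so every endpoint logs and responds the same way.
function withErrorHandling<Args extends unknown[]>(
  handler: (...args: Args) => Promise<Response>,
): (...args: Args) => Promise<Response> {
  return async (...args: Args) => {
    try {
      return await handler(...args);
    } catch (err) {
      console.error("[api]", err); // full details go to logs, never to the client
      const { status, body } = toErrorPayload(err);
      return new Response(JSON.stringify(body), {
        status,
        headers: { "Content-Type": "application/json" },
      });
    }
  };
}
```

In a route file, something like `export const GET = withErrorHandling(async (req: Request) => { ... })` would give each of the five endpoints identical behavior, which is exactly the consistency the chat workflow failed to produce.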

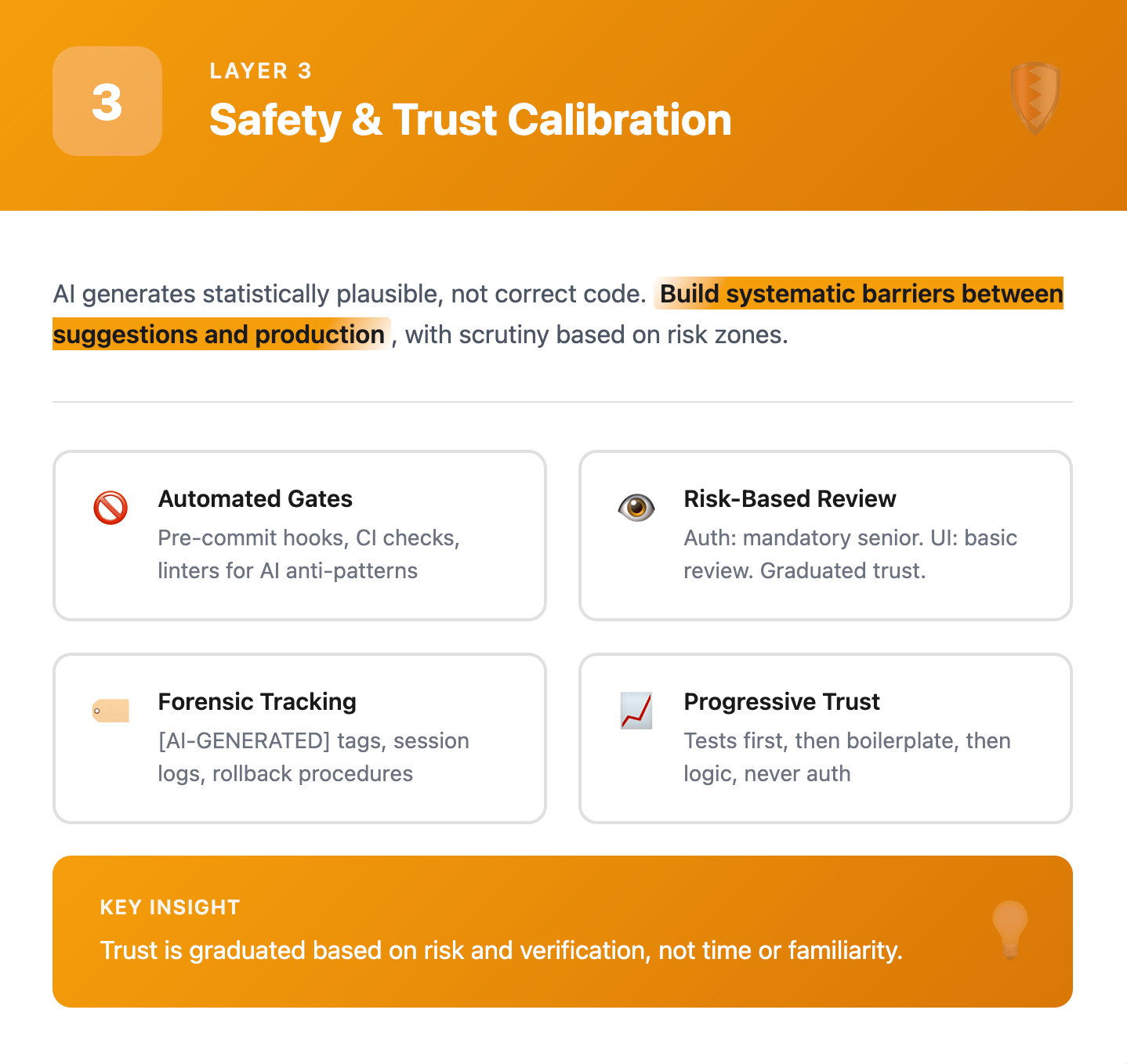

Layer 3: Safety and Trust Calibration

AI generates statistically plausible code, not absolutely correct code. It will confidently produce solutions that look professional but violate security principles, introduce race conditions, or leak memory—all while passing basic syntax checks. Safety means building systematic barriers between AI suggestions and production code, with different levels of scrutiny based on risk.

How it manifests

Automated gates that catch known patterns:

Pre-commit hooks blocking localStorage usage in payment code

CI checks failing when test coverage drops after AI changes

Linters configured to catch common AI anti-patterns

Build processes that reject new dependencies without documentation
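The localStorage gate, for example, can start as a small scan over staged files. Here is a minimal sketch, assuming the hook script collects staged paths (for example with `git diff --cached --name-only`) and passes in their contents. The `payments/` path convention and the single rule are illustrative. A regex scan catches the obvious cases, not every way code can misuse storage.

```typescript
// Illustrative rule format; the path scope and pattern are assumptions to adapt.
interface StagedFile {
  path: string;
  content: string;
}

interface GuardRule {
  id: string;
  appliesTo: RegExp; // which file paths the rule guards
  forbidden: RegExp; // must not appear in those files (no `g` flag, so .test() is stateless)
  message: string;
}

const guardRules: GuardRule[] = [
  {
    id: "no-web-storage-in-payments",
    appliesTo: /(^|\/)payments?\//,
    forbidden: /\b(localStorage|sessionStorage)\b/,
    message: "browser storage is forbidden in payment code",
  },
];

// Returns one "path:line [rule] message" entry per offending line.
function findViolations(files: StagedFile[], rules: GuardRule[]): string[] {
  const violations: string[] = [];
  for (const file of files) {
    for (const rule of rules) {
      if (!rule.appliesTo.test(file.path)) continue;
      file.content.split("\n").forEach((line, i) => {
        if (rule.forbidden.test(line)) {
          violations.push(`${file.path}:${i + 1} [${rule.id}] ${rule.message}`);
        }
      });
    }
  }
  return violations;
}

const report = findViolations(
  [{ path: "app/payments/checkout.ts", content: "const card = localStorage.getItem('card');" }],
  guardRules,
);
if (report.length > 0) {
  console.error(report.join("\n"));
  // A real hook would exit with a non-zero status here to block the commit.
}
```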

Human review requirements based on risk zones:

Authentication code: Mandatory senior review, no exceptions

Database migrations: Review plus staging environment testing

Business logic: Standard PR review with extra scrutiny

UI components: Basic review often sufficient

Documentation: Light review for technical accuracy
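Risk zones are easier to enforce when they're written down as data rather than remembered. Here is a sketch, assuming hypothetical path conventions (`auth/`, `migrations/`, `lib/` or `services/` for business logic, `components/`, Markdown for docs) that you'd swap for your own. It returns the strictest tier any changed file triggers, which a CI step could then compare against the approvals a PR actually has.

```typescript
// Review tiers from strictest to lightest, mirroring the list above.
const tiers = ["senior-review", "review-plus-staging", "standard-plus", "basic", "light"] as const;
type Tier = (typeof tiers)[number];

// Hypothetical path conventions. Zones are listed strictest first,
// so `find` below returns the strictest zone a path falls into.
const zones: { pattern: RegExp; tier: Tier }[] = [
  { pattern: /(^|\/)auth\//, tier: "senior-review" },
  { pattern: /(^|\/)migrations\//, tier: "review-plus-staging" },
  { pattern: /(^|\/)(lib|services)\//, tier: "standard-plus" },
  { pattern: /(^|\/)components\//, tier: "basic" },
  { pattern: /\.mdx?$/, tier: "light" },
];

function tierFor(path: string): Tier {
  const match = zones.find((z) => z.pattern.test(path));
  // Paths outside every known zone get standard review, not the lightest tier.
  return match ? match.tier : "standard-plus";
}

// The whole change needs the strictest review any single file requires.
function requiredTier(changedPaths: string[]): Tier {
  let strictest = tiers.length - 1; // start at the lightest tier
  for (const p of changedPaths) {
    strictest = Math.min(strictest, tiers.indexOf(tierFor(p)));
  }
  return tiers[strictest];
}

console.log(requiredTier(["app/api/auth/route.ts", "docs/setup.md"]));
```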

Forensic capabilities for when things go wrong:

Git commits tagged with [AI-GENERATED] for blame tracking

Session logs showing exact prompts and responses

Diff history showing what AI changed versus human edits

Rollback procedures specifically for AI-introduced bugs
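The [AI-GENERATED] tag only pays off if you can query it when something breaks. Here is a sketch that filters the output of `git log --oneline --no-decorate`, assuming the tag appears in the commit subject line. It is a pure function over the log text so it stays testable; narrowing to a suspect area is left to git itself (for example `git log --oneline --no-decorate --since="2 weeks ago" -- app/api`). The sample log lines are made up for illustration.

```typescript
interface TaggedCommit {
  hash: string;
  subject: string;
}

// Parses "<short-hash> <subject>" lines and keeps only commits whose subject
// carries the tag. Assumes the [AI-GENERATED] subject-line convention above.
function aiGeneratedCommits(logOutput: string, tag = "[AI-GENERATED]"): TaggedCommit[] {
  return logOutput
    .split("\n")
    .map((line) => line.trim())
    .filter((line) => line.length > 0)
    .map((line) => {
      const space = line.indexOf(" ");
      return space === -1
        ? { hash: line, subject: "" }
        : { hash: line.slice(0, space), subject: line.slice(space + 1) };
    })
    .filter((c) => c.subject.includes(tag));
}

// Made-up sample input standing in for real `git log` output.
const sampleLog = [
  "a1b2c3d [AI-GENERATED] Add retry logic to webhook handler",
  "e4f5a6b Fix typo in README",
  "0c9d8e7 [AI-GENERATED] Refactor checkout form validation",
].join("\n");

for (const c of aiGeneratedCommits(sampleLog)) {
  console.log(c.hash, c.subject);
}
```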

Progressive trust building through verification:

Start with AI generating tests, not implementation

Graduate to boilerplate only after tests pass

Allow business logic changes only with comprehensive test coverage

Never full autonomy for security-critical paths

The key insight: Trust is graduated based on risk and verification. You might trust AI completely for generating test fixtures, partially for CRUD operations, minimally for business logic, and not at all for authentication. Each level requires different safety mechanisms, from automated checks for low-risk changes to mandatory human review for critical paths.

Layer 4: Scale Implications

AI coding patterns developed in isolation create multiplicative problems at team scale. Every developer's different AI workflow creates competing conventions, inconsistent outputs, and review bottlenecks. The flexibility that helps individual productivity becomes chaos when five developers use five different approaches.

How it manifests

Configuration fragmentation:

Each developer maintains different .cursorrules based on their pain points

One developer's "never use hooks" conflicts with another's "always use hooks"

AI generates different patterns for the same requirements based on who asks

Project-wide conventions get overridden by personal configurations

Knowledge silos from conversational context:

Developer A spends hours training AI on architectural decisions

Developer B starts fresh, AI suggests contradicting architecture

Critical context exists only in individual chat histories

Same questions get answered differently for each team member

Review friction from inconsistent AI patterns:

PR comments devolve into "why did the AI do it this way?"

Senior developers reject AI patterns junior developers accept

Time spent debating AI suggestions exceeds time saved generating code

Trust erodes as team members question each other's AI judgment

Tool migration impossibility:

Team investment in Cursor-specific workflows

Switching to Claude Code means retraining everyone

Custom commands and patterns don't transfer

Vendor lock-in through workflow dependency, not just code

My scaling failures:

Three projects with identical needs, three different command names:

generate-component (remembered this one)

make-react-component (forgot about this one)

create-ui-element (definitely forgot this existed)

Each time I switched projects, I'd recreate the same functionality with a different name because I couldn't remember what I'd called it before. Multiply this by five developers, and you have 15 different commands doing the same thing.

Worse: context pollution. I'd explain our authentication architecture to Claude in Project A, then re-explain the same architecture differently in Project B, leading to subtly different implementations of the same patterns. The AI has no memory between sessions, so every conversation starts from zero, and every developer explains things differently.

The compounding effect: With five developers, you get roughly 25x the problems (5 × 5), because every developer's personal patterns interact with every other developer's patterns. Code reviews become archaeological expeditions through layers of different AI conventions. The codebase develops multiple personalities, each reflecting how a different developer configured their AI assistant.

Vendor lock-in reality: When Cursor adds a new feature, the team reorganizes workflows around it. When Claude Code offers better context handling, switching means retraining everyone. The investment isn't in code—it's in muscle memory, commands, configurations, and workflows that evaporate when tools change.

Critical Safety Warnings

Before implementing any of these patterns, understand what should never touch AI:

Never use AI-generated code for:

Authentication or authorization systems

Payment processing or financial calculations

Cryptographic implementations

Medical or safety-critical systems

Personally identifiable information handling

Compliance-regulated code (HIPAA, PCI, SOX)

Prerequisites for Safe Usage

You need:

Deep understanding of your language and framework (to catch subtle errors)

Time budget for reviewing every line of generated code

Comprehensive test coverage already in place

Established security review processes

Willingness to reject 70-80% of suggestions

Without these, AI tools multiply risk rather than productivity.

Enterprise Reality Check

If you're in a regulated environment:

Your AI usage might already violate policy

"Approved" tools don't automatically mean "approved usage"

Security teams rarely understand AI tool risks

Compliance auditors definitely don't

Check before you lose your job over a productivity hack.

When NOT to Use AI Tools

Contexts where AI makes things worse:

Time-critical production fixes without a failing test. Debugging AI suggestions under pressure leads to compound failures.

Novel algorithm development requiring first-principles design. AI rehashes training data patterns. For genuinely new approaches, it actively misleads.

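Performance-critical paths without a benchmark harness. AI suggestions tend toward readable, general solutions rather than optimized ones, and without benchmarks you can't tell whether a suggestion made things faster or slower.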

Exploratory debugging when the failure mode is unknown. When you don't understand the bug, AI multiplies confusion. It pattern-matches symptoms to wrong causes.

Deterministic systems requiring reproducible outputs. AI output varies between sessions. Same prompt, different day, different code. Learned this building a parser that needed consistent output.
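On the performance point, a benchmark harness doesn't have to be elaborate to act as a gate for AI suggestions. Here is a minimal sketch, assuming synchronous code and the standard `performance.now()` timer. It warms up, times several runs, and reports the median so one noisy run can't decide. The two summing functions are hypothetical stand-ins for "current code" versus "what the AI suggested"; for serious measurements, a dedicated benchmarking tool with proper statistics is the better choice.

```typescript
interface BenchResult {
  name: string;
  medianMs: number;
  samples: number[];
}

function bench(name: string, fn: () => void, runs = 15, itersPerRun = 1000): BenchResult {
  // Warm-up pass so the JIT has compiled the hot path before timing starts.
  for (let i = 0; i < itersPerRun; i++) fn();

  const samples: number[] = [];
  for (let r = 0; r < runs; r++) {
    const start = performance.now();
    for (let i = 0; i < itersPerRun; i++) fn();
    samples.push(performance.now() - start);
  }

  // Median is less sensitive to a single slow run than the mean.
  const sorted = [...samples].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  const medianMs = sorted.length % 2 === 1 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
  return { name, medianMs, samples };
}

// Hypothetical comparison: summing an array with a loop vs. reduce.
const data = Array.from({ length: 1000 }, (_, i) => i);
let sink = 0; // keeps results observable so the work isn't optimized away

const original = bench("for-loop", () => {
  let total = 0;
  for (let i = 0; i < data.length; i++) total += data[i];
  sink += total;
});
const aiSuggested = bench("reduce", () => {
  sink += data.reduce((acc, x) => acc + x, 0);
});

console.log(original.name, original.medianMs.toFixed(2), "ms");
console.log(aiSuggested.name, aiSuggested.medianMs.toFixed(2), "ms");
```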

Data Privacy Reality

By default, code sent to AI services may be retained or logged. Unless you have explicit retention-off agreements, self-hosted or on-prem inference, or a vetted proxy, assume anything you send may be kept. Treat anything non-public as sensitive and scrub it before sharing.

Privacy checklist:

Use a broker/proxy to strip secrets

Enforce allow-lists on files shared with tools

Default to retention-off providers or self-hosted for sensitive code
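The first two checklist items can start as a small filter that sits between your editor and the AI service. Here is a sketch, assuming a hypothetical allow-list of path prefixes and a few well-known secret shapes (AWS access key IDs, PEM private-key blocks, and env-style `NAME=value` lines whose names look secret). Regex redaction is a last line of defense that will miss secrets it has no pattern for, so it complements retention-off providers rather than replacing them.

```typescript
// Hypothetical allow-list: only these path prefixes may be shared with AI tools.
const shareablePrefixes = ["src/components/", "src/hooks/", "docs/"];
// Never share these, even inside an allowed directory.
const blockedPatterns = [/\.env(\..*)?$/, /(^|\/)auth\//, /\.pem$/];

function isShareable(path: string): boolean {
  if (blockedPatterns.some((p) => p.test(path))) return false;
  return shareablePrefixes.some((prefix) => path.startsWith(prefix));
}

// A few common secret shapes; extend with whatever your project actually uses.
const secretPatterns: RegExp[] = [
  /AKIA[0-9A-Z]{16}/g, // AWS access key IDs
  /-----BEGIN [A-Z ]*PRIVATE KEY-----[\s\S]*?-----END [A-Z ]*PRIVATE KEY-----/g,
];

function redactSecrets(text: string): string {
  let out = text;
  for (const pattern of secretPatterns) {
    out = out.replace(pattern, "[REDACTED]");
  }
  // Env-style uppercase names containing SECRET, TOKEN, PASSWORD, or API_KEY.
  out = out.replace(
    /\b([A-Z0-9_]*(SECRET|TOKEN|PASSWORD|API_KEY)[A-Z0-9_]*)\s*[:=]\s*\S+/g,
    "$1=[REDACTED]",
  );
  return out;
}

console.log(isShareable("src/components/Button/index.tsx"));
console.log(redactSecrets('STRIPE_SECRET_KEY="sk_test_123"'));
```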

Building These Layers in Practice

Implementing these four layers doesn't happen overnight. Start with the most critical for your situation.

If You're a Solo Developer

Focus: Layer 1 (Context Engineering) + Layer 3 (Safety Gates)

Working alone means you're both the generator and reviewer of AI code. You need rules that prevent repeated mistakes and basic safety checks since there's no team to catch your errors.

Start by building a context file that encodes every lesson learned. When AI generates a useState inside a server component, add a rule. When it suggests localStorage for sensitive data, add a rule. Your context file becomes your external memory.

Simultaneously, implement basic safety gates: a pre-commit hook that blocks common AI anti-patterns, and a simple script that fails if test coverage drops. You can't review your own code objectively at 2 AM, so automation must be your backup.
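The coverage check can be a few lines. Here is a sketch, assuming your test runner writes an Istanbul-style `coverage-summary.json` (Jest and Vitest can emit one via the `json-summary` reporter, with a `total.lines.pct` field) and that you keep the last accepted percentage in a baseline you control. The 0.1-point tolerance is an arbitrary illustrative value to absorb rounding noise.

```typescript
// Minimal slice of an Istanbul coverage-summary.json; other fields omitted.
interface CoverageSummary {
  total: { lines: { pct: number } };
}

interface CoverageVerdict {
  ok: boolean;
  message: string;
}

function checkCoverage(
  baselinePct: number,
  current: CoverageSummary,
  tolerance = 0.1, // illustrative allowance for rounding noise
): CoverageVerdict {
  const currentPct = current.total.lines.pct;
  if (currentPct + tolerance < baselinePct) {
    return { ok: false, message: `Line coverage dropped from ${baselinePct}% to ${currentPct}%` };
  }
  return { ok: true, message: `Line coverage ${currentPct}% (baseline ${baselinePct}%)` };
}

// In a real script you'd read the summary from disk and exit non-zero on failure.
const verdict = checkCoverage(82.5, { total: { lines: { pct: 79.1 } } });
console.log(verdict.ok ? verdict.message : `FAIL: ${verdict.message}`);
```

When the check passes with a higher number, raising the stored baseline turns this into a ratchet: coverage can go up, but an AI-assisted change can't quietly bring it back down.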

If You're on a Team

Focus: Layer 4 (Scale Implications) FIRST

This might seem counterintuitive, but team chaos compounds quadratically, not linearly: five developers creating their own patterns means roughly 25x the problems, because each developer's conventions collide with everyone else's. You must establish shared conventions before everyone develops their own.

Start with a team-wide context file in your repository. Agree on which tools you'll use for which tasks. Document your AI workflow in your contributing guide. Create shared commands that everyone uses the same way.
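Shared commands are also the fix for my generate-component / make-react-component / create-ui-element drift. Here is a sketch, assuming a hypothetical registry checked into the repo alongside the team context file, where every alias people might reach for resolves to one canonical command. The command name and description are illustrative.

```typescript
interface SharedCommand {
  name: string;      // canonical name everyone uses
  aliases: string[]; // names people tend to invent instead
  description: string;
}

// Hypothetical team registry; in practice this would live in the repo.
const registry: SharedCommand[] = [
  {
    name: "generate-component",
    aliases: ["make-react-component", "create-ui-element"],
    description: "Scaffold /components/[ComponentName]/index.tsx using existing patterns",
  },
];

// Resolve whatever someone typed to the canonical command, or undefined if unknown.
function resolveCommand(input: string, commands: SharedCommand[]): string | undefined {
  const wanted = input.trim().toLowerCase();
  const hit = commands.find((c) => c.name === wanted || c.aliases.includes(wanted));
  return hit?.name;
}

// Flag any name claimed by more than one command so duplicates surface in review.
function duplicateNames(commands: SharedCommand[]): string[] {
  const counts = new Map<string, number>();
  for (const c of commands) {
    for (const n of [c.name, ...c.aliases]) {
      counts.set(n, (counts.get(n) ?? 0) + 1);
    }
  }
  return Array.from(counts.entries())
    .filter(([, count]) => count > 1)
    .map(([n]) => n);
}

console.log(resolveCommand("create-ui-element", registry));
```

Running `duplicateNames` in CI means a second command that claims an existing alias fails review instead of quietly joining the pile.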

If You're in Production

Focus: Layer 3 (Safety & Trust) - Non-Negotiable

Production systems have real users and real consequences. One AI-generated security hole can end careers. One corrupted database from hallucinated SQL can destroy customer trust.

Your first priority is comprehensive safety gates. Mandatory review for any AI-touched authentication code. Pre-commit hooks that block AI from modifying payment processing. Git commits tagged with [AI-GENERATED] for forensic analysis when something breaks.

Only after safety is established should you worry about productivity optimizations. It's better to be slow and safe than fast and fired.

If You're Learning AI Tools

Focus: Layer 2 (Interface Selection)

Most frustration with AI tools comes from using the wrong interface for the task. You paste code into ChatGPT and wonder why it can't see your file structure. You use Cursor for exploration and wonder why the context degrades.

Start by understanding what each tool can actually see and do. ChatGPT sees only what you paste or upload. Cursor can index your whole project. GitHub Copilot's inline completions draw mainly on the current file and your open tabs. Each has different context windows, different capabilities, different sweet spots.

Spend a week using only one interface, then switch. You'll quickly learn that interface capabilities determine outcomes more than prompt quality. The best prompt in the wrong tool produces worse results than an average prompt in the right tool.

The Reality of Defense in Depth

No single layer is sufficient. Context engineering without safety gates means sophisticated disasters. Safety gates without proper interface selection means you're protecting against the wrong risks. Scale patterns without context engineering means consistent chaos instead of individual chaos.

These layers work together:

Context engineering teaches AI what not to break

Interface selection ensures AI has the right information

Safety gates catch what context engineering missed

Scale patterns prevent team-level degradation

The goal isn't perfection—it's making AI assistance net-positive despite its fundamental unreliability.

Key Takeaways

These four layers emerged from painful experience, not theory. Every rule in my context file represents something that went wrong. Every safety gate exists because something slipped through. Every scale consideration comes from watching team dynamics degrade.

The progression from "AI will revolutionize coding" to "never let AI touch auth" is universal. Everyone starts optimistic and ends up with defense systems. The only question is whether you build these defenses proactively or after disasters.

In the final part, I'll share the specific anti-patterns that waste the most time, the uncomfortable truths about AI development that marketing won't tell you, and the patterns that actually work once you accept what these tools really are—fast but unreliable assistants that need constant supervision.

For now, remember: AI tools are probability machines operating in a deterministic world. They don't understand your code, your constraints, or your consequences. Build your defenses accordingly.
