Notes on AI-Integrated Development: Context Hoarding, Tool Thrashing, and 6 Other AI Coding Mistakes I Make Weekly

Plus the uncomfortable truths about AI development that marketing won't tell you

In Parts 1 and 2, I showed you the three modes of AI coding and the four-layer defense system that keeps AI from shipping disasters. But even with perfect frameworks and safety systems, I still make the same mistakes every week. Some patterns are so seductive, so seemingly logical, that I fall into them repeatedly despite knowing better.

This final part is about the messy reality of AI development—the anti-patterns that waste hours, the context degradation that sneaks up on you, and the uncomfortable truths that become clear only after months of daily use. I'll also share what actually works once you accept what these tools really are: fast but unreliable assistants that occasionally surprise you with brilliance but mostly need constant supervision.

Anti-Pattern Catalog

Mistakes I've made repeatedly, each seeming reasonable at the time.

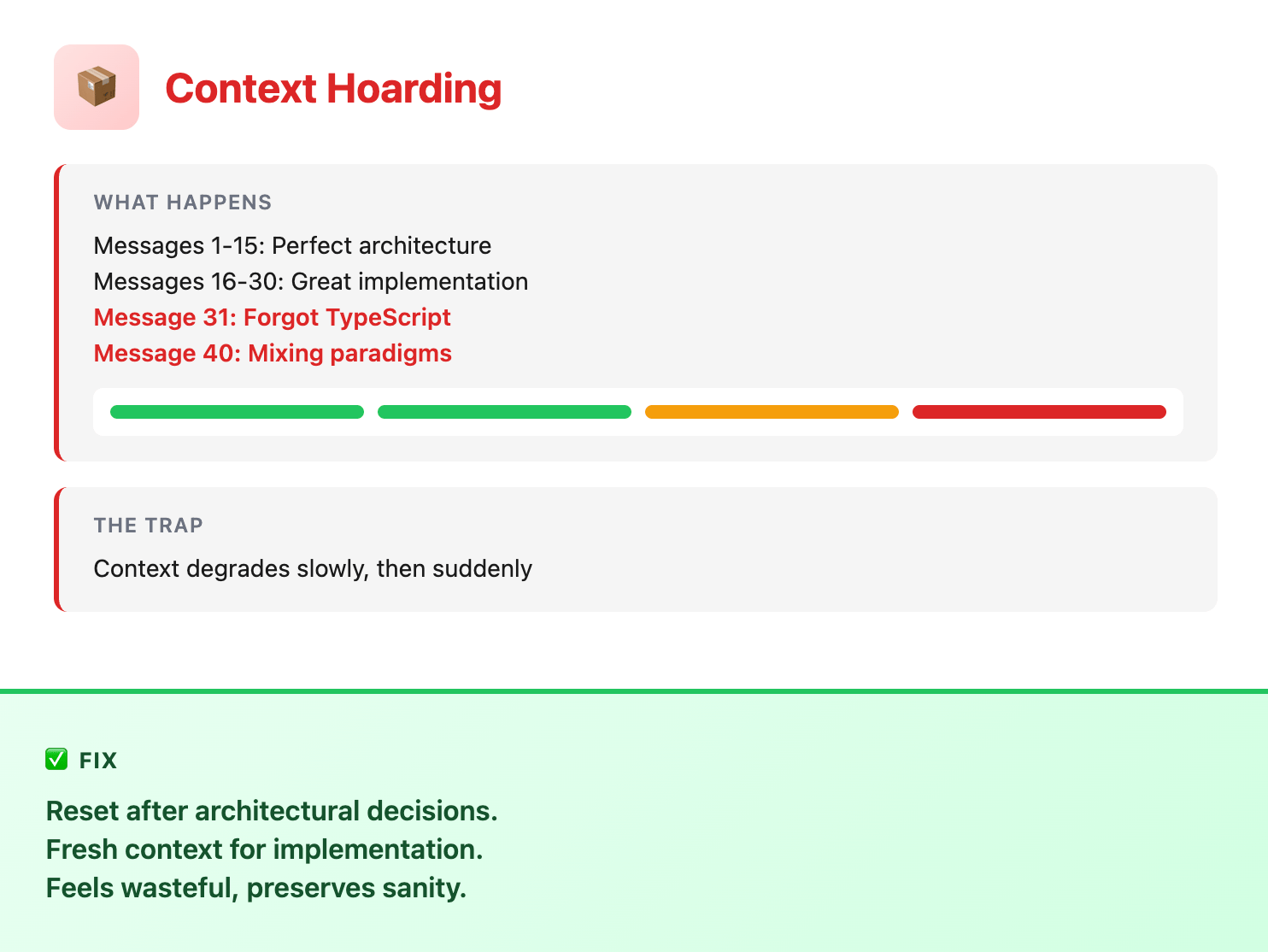

Context Hoarding

Anti-Pattern: Context Hoarding

Tool Thrashing

Anti-Pattern: Tool Thrashing

The Solo Artist

Anti-Pattern: The Solo Artist

Complexity Creep

Anti-Pattern: Complexity Creep

Hallucination Whispering

Anti-Pattern: Hallucination Whispering

Context Degradation: What It Looks Like & What to Do

Context degradation happens predictably but announces itself subtly. Output quality tends to drop as a conversation grows, a widely reported limitation of transformer-based models. Newer models degrade more gracefully, but they still degrade.

Context Degradation Warning Signs

Test it yourself: have a 40-message conversation about architecture. Messages 35-40 will probably contradict messages 1-5.

Context degradation is something to actively manage. Each mode requires different context strategies. Here are some ideas:

Exploration Mode: High context accumulation is inevitable. Set a timer for 15 messages, then proactively reset the context (e.g., /clear if using Claude Code) before hitting the 20-message degradation point. Save valuable discoveries externally before clearing.

Execution Mode: Keep context pristine. Each task should start fresh. Reset context between unrelated tasks even if context isn't degraded. Clean context produces predictable outputs.

Refinement Mode: Use diff-based conversations. Instead of pasting entire files, share:

- The specific failing test
- The exact diff you want
- The git diff output as context
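To make the diff-based habit concrete, here is a minimal sketch of a prompt builder that only accepts those three pieces. The function name and the section titles are my own placeholders, not part of any tool.

```python
def build_refinement_prompt(failing_test: str, desired_change: str, git_diff: str) -> str:
    """Assemble a compact refinement prompt from three small pieces of context."""
    sections = [
        ("Failing test", failing_test),
        ("Change I want", desired_change),
        ("Current git diff", git_diff),
    ]
    parts = []
    for title, body in sections:
        body = body.strip()
        if not body:
            # Refuse to build a prompt with a missing piece instead of padding it
            raise ValueError(f"missing section: {title}")
        parts.append(f"### {title}\n{body}")
    return "\n\n".join(parts)
```

The diff text can come from wherever you like, for example the captured output of `git diff`. The point is that the prompt has no slot for whole files.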

Pro tip: If you're not resetting context at least once per session in exploration, you're probably accumulating dead context that confuses the AI.
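If a timer feels too manual, the same idea fits in a tiny counter. This is a sketch, not a tool I ship: the class name is hypothetical, and the limits are the rough numbers from this post (15 messages for exploration, 10 for execution). Refinement is left out because I don't have a number for it.

```python
from dataclasses import dataclass

# Message limits taken from the heuristics in this post; tune them to your own sessions.
RESET_AFTER = {
    "exploration": 15,  # reset before the ~20-message degradation point
    "execution": 10,    # beyond this, requirements were probably unclear
}


@dataclass
class ContextBudget:
    mode: str
    messages: int = 0

    def record_message(self) -> bool:
        """Count one message and return True once the reset point is reached."""
        self.messages += 1
        return self.messages >= RESET_AFTER[self.mode]

    def reset(self) -> None:
        """Call after clearing the conversation (and saving discoveries first)."""
        self.messages = 0
```

Call `record_message()` once per exchange and reset the conversation when it returns True.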

Patterns I've Observed

Not rigorous metrics—patterns from usage:

First-Generation Usefulness

- Execution mode with clear specs: ~60-70% usable
- Exploration mode: ~20-30% usable
- Refinement mode: ~50% usable

"Usable" means requiring less than 10 minutes of fixes. Most suggestions need some adjustment.

Session Efficiency Patterns

- Exploration beyond 20 messages rarely adds insights; the conversation becomes circular.
- Execution beyond 10 messages means requirements weren't clear or you're actually exploring.
- Refinement follows a pattern: identify, fix, verify, move on.

Time Impact Reality

- Routine boilerplate: 20-40% faster to write, similar time overall after review
- Complex business logic: Usually slower (exploration overhead + debugging)
- Bug fixing with failing test: Sometimes faster
- Bug fixing without test: Often makes things worse when failure mode is misunderstood
- Performance optimization: Usually makes things worse initially

Building for Future Scale

Even working solo, I structure projects assuming someone else (probably me in six months) will inherit this mess:

The AI_CONTEXT.md file

Lives in project root, explains what the AI shouldn't break:

    ## Project Overview
    [One paragraph explaining what this does]

    ## Key Architecture Decisions
    - Why we use pattern X not Y
    - Why we avoid technology Z
    - Performance vs. maintainability tradeoffs

    ## Conventions
    - Naming patterns
    - Error handling approach
    - Testing philosophy

    ## Anti-patterns
    - What not to do and why

    ## AI Tool Configuration
    - Which tools used and why
    - Specific version dependencies
    - Custom commands and their purposes

    ## Known AI Failures
    - Historical AI mistakes to watch for
    - Common hallucinations in this codebase
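A file like this only helps if it stays complete. As a rough sketch, a check along these lines could run in CI or a hook. The function name is hypothetical, and the heading list simply mirrors the template above.

```python
REQUIRED_SECTIONS = [
    "Project Overview",
    "Key Architecture Decisions",
    "Conventions",
    "Anti-patterns",
    "AI Tool Configuration",
    "Known AI Failures",
]


def missing_sections(markdown_text: str) -> list[str]:
    """Return the required '## ' headings that are absent from the file text."""
    present = {
        line[3:].strip()
        for line in markdown_text.splitlines()
        if line.startswith("## ")
    }
    return [name for name in REQUIRED_SECTIONS if name not in present]
```

Feed it the contents of AI_CONTEXT.md and fail the check if the returned list is not empty.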

Self-documenting commands

YAML forces clarity:

    name: generate-component
    description: Creates React component with tests
    parameters:
      - name: Component name (PascalCase)
      - props: prop:type pairs
    template: |
      Create a React component with:
      - TypeScript interfaces
      - Tailwind styling
      - Loading and error states
      - Unit tests

Can't create a mysterious gen-cmp command when you have to write out what it actually does.
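The same pressure can be enforced mechanically. Below is a minimal sketch that assumes the YAML has already been parsed into a plain dict by whatever YAML library you use. The function name and the three-word minimum for descriptions are arbitrary choices of mine.

```python
REQUIRED_KEYS = ("name", "description", "parameters", "template")


def command_problems(command: dict) -> list[str]:
    """List the reasons a command definition is not self-documenting."""
    problems = [f"missing '{key}'" for key in REQUIRED_KEYS if not command.get(key)]
    description = command.get("description") or ""
    # Assumed rule: a one-word description is as opaque as a cryptic name.
    if description and len(description.split()) < 3:
        problems.append("description is too short to explain the command")
    return problems
```

An empty list means the definition at least says what it does. It cannot tell you whether the description is true.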

Repository structure that makes AI configuration visible:

    project/
    ├── .cursorrules          (what AI can't touch)
    ├── .ai/
    │   ├── commands/         (reusable prompts)
    │   ├── templates/        (code patterns)
    │   └── conventions.md    (team agreements)
    └── docs/
        └── ai-patterns.md    (lessons learned)

Simple git hooks:

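    #!/bin/sh
    # pre-commit hook: if AI touches auth/payment/security directories, stop.
    # Git can't tell who wrote a change, so this blocks any staged file there.
    if git diff --cached --name-only | grep -E "(auth|payment|security)/"; then
      echo "⚠️ AI changes in sensitive directories" >&2
      exit 1
    fi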

The point here is to make AI's involvement visible and reversible. Future-you (or your teammate) should understand what AI touched, why it was allowed to touch it, and how to undo it when things go wrong.

Emerging Patterns: MCP Servers

Starting to experiment with Model Context Protocol servers for automated context. Early observations:

- Good for repetitive information gathering when context is static and bounded (schemas, routes, feature flags)
- Terrible for nuanced architectural decisions
- Another layer that can break

Avoid MCP for evolving architectural decisions. Too early to recommend, but worth watching if you're hitting context limits repeatedly.

Potential Next Steps

Pattern Mining from Failures

The logical next step would be extracting patterns from logged sessions:

- Every hallucination could become a new rule
- Every wasted exploration session could refine mode recognition
- Every context degradation could adjust reset triggers

Potential tools worth building:

- Script to analyze Claude/Cursor logs for repeated mistakes
- Automated rule generator from failure patterns
- Context degradation detector

The concept is simple—systematically learning from the mistakes that keep happening. Whether anyone actually builds these tools depends on whether the manual pain exceeds the automation effort.
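To show how small the first version could be, here is a sketch that counts repeated failures. The log format is entirely hypothetical: a hand-maintained JSON Lines file with `kind` and `detail` fields. I am not claiming Claude Code or Cursor write logs in this shape.

```python
import json
from collections import Counter


def repeated_failures(jsonl_text: str, min_count: int = 2) -> list[tuple[str, int]]:
    """Count 'kind: detail' pairs in a hypothetical JSONL failure log."""
    counts = Counter()
    for line in jsonl_text.splitlines():
        if not line.strip():
            continue  # tolerate blank lines in a hand-edited log
        entry = json.loads(line)
        counts[f"{entry['kind']}: {entry['detail']}"] += 1
    # Only failures seen at least min_count times are candidates for a new rule
    return [(key, n) for key, n in counts.most_common() if n >= min_count]
```

Anything that shows up more than once is a candidate for a new line in your rules file.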

Toward Measurement

Another natural next step would be quantifying how sessions actually go. Potential metrics worth tracking:

- Session resets and acceptance rates
- Token efficiency (how much generated code actually ships)
- Mode transition patterns (when people switch from exploration to execution)
- Context degradation indicators

Tools that could provide insight:

- Session analyzers to identify productive vs. wasteful patterns
- Token calculators to measure exploration overhead
- Pattern recognition to spot mode mismatches
- Degradation detection to know when to reset

The goal would be understanding these tools well enough to use them responsibly at scale. With enough tracked sessions, we could move from anecdotes to actual data about what works and what doesn't.

Whether anyone builds these tools depends on whether the value of precise measurement exceeds the effort of tracking. For now, rough heuristics and pattern recognition seem sufficient for most developers.
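For a sense of scale, the rough-heuristic version of those metrics fits in a few lines. This is a sketch under assumptions: the `Session` record is hypothetical and filled in by hand, and it uses lines of code as a stand-in for tokens.

```python
from dataclasses import dataclass


@dataclass
class Session:
    suggestions: int      # AI suggestions offered
    accepted: int         # suggestions kept after review
    lines_generated: int  # proxy for tokens generated
    lines_shipped: int    # generated lines that survived to a commit
    resets: int           # context resets during the session


def summarize(sessions: list[Session]) -> dict[str, float]:
    """Aggregate acceptance rate, ship ratio, and resets per session."""
    suggestions = sum(s.suggestions for s in sessions)
    generated = sum(s.lines_generated for s in sessions)
    return {
        "acceptance_rate": sum(s.accepted for s in sessions) / suggestions if suggestions else 0.0,
        "ship_ratio": sum(s.lines_shipped for s in sessions) / generated if generated else 0.0,
        "resets_per_session": sum(s.resets for s in sessions) / len(sessions) if sessions else 0.0,
    }
```

A low ship ratio on exploration days is expected. A low one on execution days suggests the specs were not as clear as I thought.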

Uncomfortable Truths

These tools amplify existing habits. My tendency toward over-engineering? Amplified. AI eagerly helps build elaborate solutions to simple problems. Like pair programming with someone who never says "maybe that's too complex."

Muscle memory fights change. I still reach for traditional autocomplete. A decade of habits doesn't vanish in three months, and I still catch myself typing boilerplate by hand while Claude waits, ready to help.

Solo habits become team debt. Every shortcut I take, every undocumented command I create, every project-specific configuration I don't standardize becomes tomorrow's confusion and technical debt.

You're creating dependencies. Every AI-specific workflow, every tool-specific optimization—tomorrow's technical debt when tools change or disappear.

Trust degrades gradually. You start reviewing everything carefully. After successes, vigilance relaxes. That's when catastrophic suggestions slip through.

The Reality of AI Assistance

IMO, AI tools are genuinely transformative when you understand their actual capabilities rather than the marketing hype.

They excel at:

- Eliminating blank page paralysis (this alone is worth the price)
- Generating boilerplate instantly, saving hundreds of micro-decisions
- Exploring implementation options orders of magnitude faster than manual research
- Catching patterns you might miss
- Maintaining consistency across large codebases
- Turning rough ideas into working prototypes in minutes
- Handling tedious refactoring that would take hours manually

They struggle with:

- Understanding your specific context beyond what you explain
- Making architectural decisions without clear constraints
- Debugging complex runtime interactions
- Knowing when they're confidently wrong

The tools that promise "10x productivity" deliver something more nuanced but equally valuable: they remove friction from the parts of coding that were never the hard part anyway. You might be 2-3x faster at the mechanical aspects of coding, which frees your brain for the actual hard problems. On good days with clear requirements, you ship features in hours that used to take days. On exploration days, you try five approaches in the time one used to take.

The real transformation is the cognitive load reduction. Not having to remember every API, not typing every boilerplate pattern, not context-switching to look up syntax—these compound into sustained focus on actual problem-solving.

Yes, you need safeguards. Yes, you'll review everything. Yes, you'll occasionally waste time on wild goose chases. But when you learn the patterns, AI tools become like having a fast but junior developer who never gets tired, never gets frustrated, and occasionally surprises you with elegant solutions you wouldn't have considered.

That's not the "AI revolution" the marketing promised, but it's absolutely worth learning to use well.

Practical Patterns That Actually Work

After all the lessons learned, these patterns consistently deliver value:

The Two-Pass Pattern: First pass with AI for structure and boilerplate, second pass by hand for business logic and security. Keeps AI in its competence zone.

The Test-First Shield: AI writes tests based on requirements, you write implementation. When tests pass, AI can suggest optimizations. Natural quality gate.

The Documentation Flip: Instead of AI writing code from your docs, have AI write docs from your code. It's better at explaining existing patterns than creating new ones.

The Incremental Refresh: Instead of context hoarding for 40 messages, work in 10-message sprints with fresh starts. Preserves quality without losing momentum.

The Pair Review Protocol: AI suggests, you review immediately, commit frequently. Never let AI-generated code accumulate without review. Tiny iterations prevent large disasters.

What I Know Now That I Wish I Knew Then

Mode awareness beats prompt engineering. Knowing whether you're exploring, executing, or refining matters more than perfect prompts.

Context degradation is inevitable. Plan for resets. Marathon sessions accumulate degraded context.

Your first month's configuration is (probably) worthless. Real rules emerge from failures, so don’t get too hung up on perfecting your context rules initially.

Tool-specific workflows are technical debt. What works in Cursor might not work in Claude Code might not work in whatever comes next.

AI amplifies your worst habits. If you over-engineer manually, you'll over-engineer more with AI.

Trust must be graduated. Complete trust for test fixtures, zero trust for auth code, graduated trust for everything between.

Team scale breaks everything. Patterns that work solo create chaos in teams.

The time savings are marginal. You might write faster but review longer. Net productivity gain is smaller than expected.
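Graduated trust is easier to keep when it is written down. Here is a minimal sketch that maps a file path to a review level. The directory names are assumptions borrowed from the git hook earlier in this post, plus a hypothetical `fixtures` directory. Swap in your own layout.

```python
from pathlib import PurePosixPath

# Hypothetical directory names; map them to your own repository layout.
ZERO_TRUST_DIRS = {"auth", "payment", "security"}
FULL_TRUST_DIRS = {"fixtures"}


def trust_level(path: str) -> str:
    """Classify a repo-relative path as 'zero', 'full', or 'graduated' trust."""
    directories = set(PurePosixPath(path).parts[:-1])  # directories only, not the file name
    if directories & ZERO_TRUST_DIRS:
        return "zero"  # sensitive directories win even if a fixtures dir is nested inside
    if directories & FULL_TRUST_DIRS:
        return "full"
    return "graduated"
```

Checking the zero-trust set first is deliberate: a fixture that lives under an auth directory still gets the strictest review.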

Final Thoughts

At the end of the day, AI development tools are fast but unreliable helpers: some days they save you work, and some days they create more of it.

What worked for me was simple:

- Use them for execution when the requirements are clear.
- Use them for refinement when you already have working code and tests.
- Keep exploration short and time-boxed so it doesn't swallow a whole afternoon.

Everything else comes down to discipline—reset when the conversation drifts, set limits before you start, and always make their changes visible and reversible.

If you take one thing away, it's this: don't let the tool set the agenda. Treat it like a junior helper, keep control of the work, and you'll avoid most of the pain I went through.

AI is another tool in the toolkit—powerful when used correctly, dangerous when used blindly, and most effective when you understand both its capabilities and its limitations.

Nowadays, I'm faster at some things, slower at others, and constantly adjusting my workflow as these tools evolve. The tools will get better. The patterns will evolve. But the fundamental challenge remains: integrating probabilistic assistants into deterministic workflows requires constant vigilance, systematic safeguards, and the humility to recognize when the old ways are still the best ways.

Welcome to AI-integrated development. It's messier than the marketing suggests, perhaps less revolutionary than promised, and still worth learning—if you approach it with eyes wide open.

This concludes the 3-part series on AI-integrated development. Part 1 covered "The 3 Modes of AI Coding: Why You're Using the Wrong One" and Part 2 explored "The 4-Layer Defense System That Stops AI From Shipping Broken Code."

If you found these patterns helpful, I'm continuing to document lessons learned at Prompt/Deploy. The tools change quickly, but the patterns remain surprisingly stable.
